Azure Sentinel for Microsoft

The problem

Cloud-based technology and security requirements are evolving rapidly. Microsoft needed an updated, robust security information and event management (SIEM) system to stay on the cutting edge of security monitoring and protect the company.

Microsoft developed Azure Sentinel as a SIEM for customers. My role as a key engineer and stakeholder was to develop and onboard Azure Sentinel as Microsoft’s internal SIEM as well.

Role: Engineer, content developer, trainer

Team size: 10

Tools: Azure Sentinel, Logstash, Kusto, Azure Pipelines, YAML, PowerShell

Skills: Data analytics, detection writing, technical writing, training, creating data pipelines, automation

Timeline: 2 years

Outcome: Product

My process

Research

To support Azure Sentinel as Microsoft’s first-party SIEM solution, we first had to evaluate the product’s features and capabilities and compare them with our existing system. We performed a thorough analysis and wrote feature requirements to match our existing security monitoring capabilities.

We collaborated with the Azure Sentinel product group as key users and stakeholders to develop features that filled requirement gaps. This process improved Sentinel as a product for all Microsoft customers.

If we can help them be successful, we’re also helping our large customers, who often have the same challenges, requirements, and needs.

— Microsoft Azure Sentinel product team

Once the features were delivered, we reevaluated the product and began onboarding while developing our own custom solutions for requirements that Azure Sentinel did not cover.

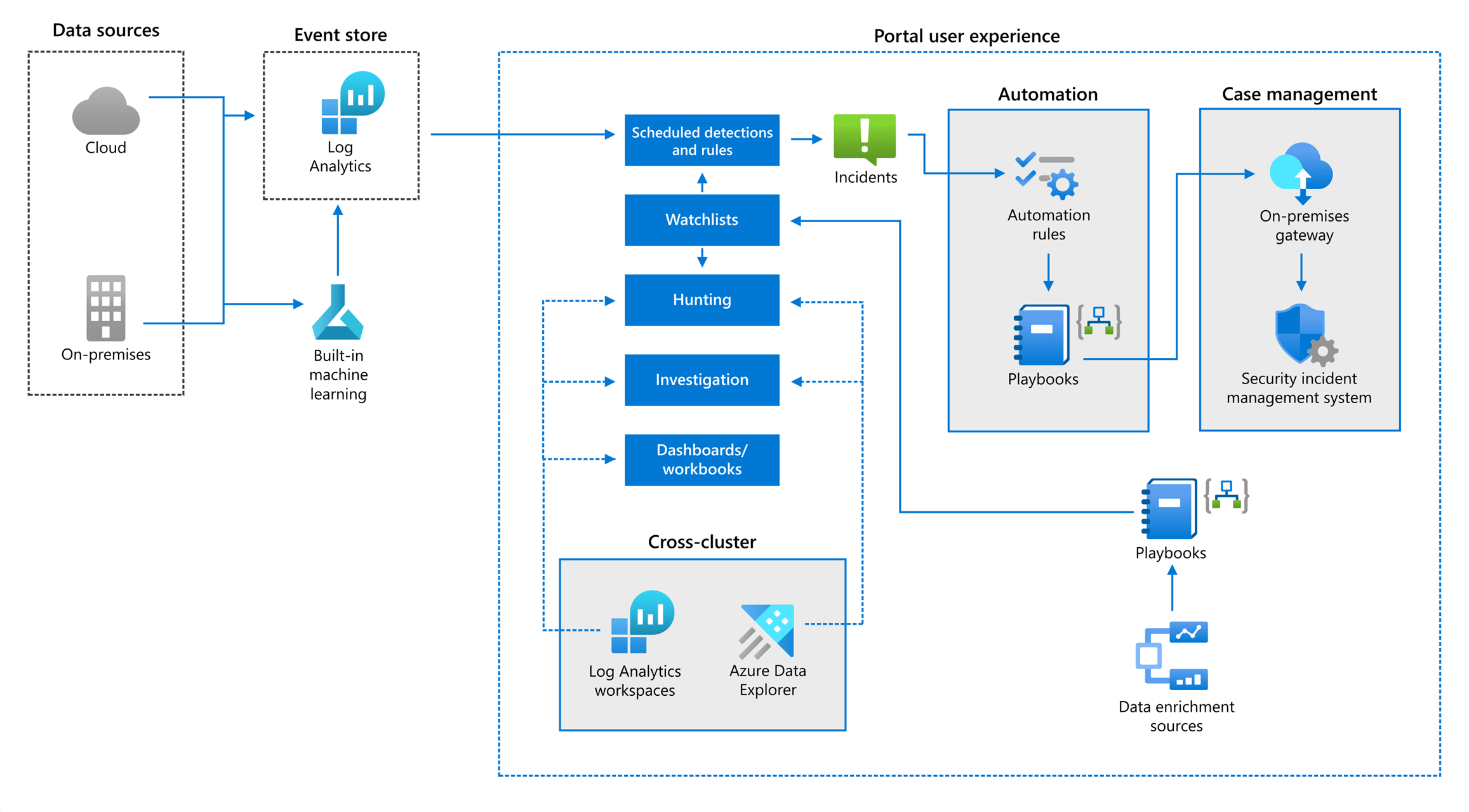

Microsoft Azure Sentinel SIEM features

Development

Creating security data pipelines

The first step in creating security detection rules is establishing the pipeline of events that the rules are based on. We did this by leveraging Sentinel’s existing data connectors to create data pipelines from other Microsoft security tools. I also used Logstash and Syslog to create custom pipelines from Palo Alto servers for network security events.

Writing threat detection rules

I wrote threat detection rules in Kusto Query Language (KQL) based on the security events from our data pipelines. I tested the rules for parity and validated their content with end users so that security analysts have all the detection data they need to respond when a rule fires.
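One way to picture the parity testing is as a set comparison. The sketch below is illustrative only and is not the tooling used on the project: it assumes "parity" means that a new KQL rule flags the same events as the rule it replaces, and the function name, the event IDs, and the report keys are all hypothetical.

```python
def parity_report(legacy_hits, new_hits):
    """Compare the event IDs flagged by a legacy rule and by its replacement.

    Both arguments are iterables of event identifiers (hypothetical inputs,
    for example exported from each system over the same time window).
    """
    legacy, new = set(legacy_hits), set(new_hits)
    return {
        "matched": sorted(legacy & new),        # flagged by both rules
        "missed_by_new": sorted(legacy - new),  # regressions to investigate
        "only_in_new": sorted(new - legacy),    # extra coverage or new noise
    }


def has_parity(report):
    """The new rule has parity when it misses nothing the legacy rule caught."""
    return not report["missed_by_new"]
```

An empty `missed_by_new` list would suggest the new rule covers everything the old one did, while `only_in_new` is worth reviewing with analysts to decide whether it is added value or noise.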

Storing detection rules as code with automated deployment

To maintain a robust change log for detection rules and to keep redundant copies of them, I designed and developed a process for storing our detection rules as JSON files in an Azure DevOps repository. This lets threat engineers use Git history as the change log, since Azure Sentinel does not keep this information.

I used PowerShell and YAML files to create an automated deployment pipeline that takes modified content from the previous Git commit and uses the Sentinel API to update threat detection rules remotely.
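The selection step of such a pipeline can be sketched as follows. This is a Python illustration, not the PowerShell used on the project, and it rests on several assumptions: rule files live under a hypothetical `detections/` folder, the file name (without `.json`) doubles as the rule ID, and the `api-version` value is a placeholder to check against the current Sentinel REST API reference. The sketch only plans the deployment; sending the authenticated `PUT` request is left out.

```python
import subprocess
from pathlib import PurePosixPath
from urllib.parse import quote

API_VERSION = "2023-02-01"  # assumption: verify against the current API reference


def git_changed_paths():
    """List files touched by the latest commit (must run inside a Git checkout)."""
    result = subprocess.run(
        ["git", "diff", "--name-only", "HEAD~1", "HEAD"],
        check=True, capture_output=True, text=True,
    )
    return result.stdout.splitlines()


def changed_rule_files(changed_paths, rules_dir="detections"):
    """Keep only JSON files under the (hypothetical) rules directory."""
    selected = []
    for raw in changed_paths:
        path = PurePosixPath(raw)
        if path.suffix == ".json" and path.parts[0] == rules_dir:
            selected.append(path)
    return selected


def rule_url(subscription_id, resource_group, workspace, rule_id):
    """Build the alert rule URL that a create-or-update PUT would target."""
    return (
        "https://management.azure.com"
        f"/subscriptions/{quote(subscription_id)}"
        f"/resourceGroups/{quote(resource_group)}"
        "/providers/Microsoft.OperationalInsights"
        f"/workspaces/{quote(workspace)}"
        "/providers/Microsoft.SecurityInsights"
        f"/alertRules/{quote(rule_id)}"
        f"?api-version={API_VERSION}"
    )


def plan_deployments(changed_paths, subscription_id, resource_group, workspace):
    """Pair each changed rule file with the URL its content would be sent to."""
    return [
        (str(path), rule_url(subscription_id, resource_group, workspace, path.stem))
        for path in changed_rule_files(changed_paths)
    ]
```

In a pipeline run, `plan_deployments(git_changed_paths(), ...)` would yield one entry per modified rule, so unrelated commits (documentation, pipeline YAML) deploy nothing.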

Developing modular automated ticket creation for detection rules

Security analysts use ServiceNow to track their incident response work. To ensure that a ticket is created in ServiceNow whenever a detection rule fires, I used Azure Logic Apps and On-Premises Gateway connections to develop an automated response playbook that parses Azure Sentinel detection data and creates a ticket for security analysts through the ServiceNow API.
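The parse-and-create logic of the playbook can be approximated in a few lines. The sketch below is written in Python rather than as a Logic App, and it makes assumptions throughout: the alert field names (`rule_name`, `severity`, `entities`), the severity-to-urgency mapping, and the `assignment_group` default are hypothetical, and the request itself (authentication, gateway routing) is not shown. The URL shape follows the ServiceNow Table API.

```python
import json

# Assumed mapping from Sentinel severity to ServiceNow urgency (1 = high, 3 = low).
SEVERITY_TO_URGENCY = {"High": "1", "Medium": "2", "Low": "3", "Informational": "3"}


def build_incident(alert, assignment_group="SOC"):
    """Turn a parsed alert (hypothetical field names) into a ticket body."""
    entities = ", ".join(alert.get("entities", [])) or "none"
    description = "\n".join([
        f"Rule: {alert['rule_name']}",
        f"Severity: {alert.get('severity', 'Unknown')}",
        f"Entities: {entities}",
    ])
    return {
        "short_description": f"[Sentinel] {alert['rule_name']}",
        "description": description,
        "urgency": SEVERITY_TO_URGENCY.get(alert.get("severity"), "3"),
        "assignment_group": assignment_group,
    }


def table_api_url(instance, table="incident"):
    """URL a POST would target to create a record through the Table API."""
    return f"https://{instance}.service-now.com/api/now/table/{table}"


def request_body(alert):
    """Serialize the ticket as the JSON payload of the POST request."""
    return json.dumps(build_incident(alert))
```

Keeping the mapping in one small function is what makes this kind of playbook modular: each detection rule supplies its own alert data, and the ticket format stays consistent for analysts.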

Microsoft Azure Sentinel system architecture diagram

Documentation

Once the development work for the new SIEM environment was complete, I created and evangelized training and documentation for both engineers and security analysts. These took the form of wiki pages, slide decks, and multiple training presentations.

I also trained external teams that wanted to leverage our knowledge to build their own automated response playbooks for creating incidents in ServiceNow.

The product

The result was a successful migration to a new and improved cloud-based SIEM with the following benefits:

Faster query performance. Our query speed with Azure Sentinel improved drastically. It’s 12 times faster than it was with the previous solution, on average, and is up to 100 times faster with some queries.

Simplified training and onboarding. Using a cloud-based, commercially available solution like Azure Sentinel means it’s much simpler to onboard and train employees. Our security engineers don’t need to understand the complexities of an underlying on-premises architecture. They simply start using Sentinel for security management.

Greater feature agility. Azure Sentinel’s feature set and capabilities iterate at a much faster rate than we could maintain with our solution developed on-premises.

Improved data ingestion. Azure Sentinel’s out-of-the-box connectors and integration with the Azure platform make it much easier to include data from anywhere and extend Azure Sentinel functionality to integrate with other enterprise tools. On average, it’s 18 times faster to ingest data into Azure Sentinel using a built-in data connector than it was with our previous solution.

Lessons learned

Throughout our Sentinel implementation, we reexamined and refined our approach to SIEM. At Microsoft’s scale, very few implementations go exactly as planned from beginning to end. Even so, we took away several lessons from our Sentinel implementation, including:

More testing enables more refinement. We tested our detections, data sources, and processes extensively. The more we tested, the better we understood how we could improve test results. This, in turn, meant more opportunities to refine our approach.

Customization is necessary but achievable. We capitalized on the flexibility of Azure Sentinel and the Azure platform often during our implementation. We found that while out-of-the-box features didn’t meet all our requirements, we were able to create customizations and integrations to meet the needs of our security environment.

Large enterprise customers might require a dedicated cluster. We used dedicated Log Analytics clusters to allow ingestion of nearly 20 billion events per day. In other large enterprise scenarios, moving from a shared cluster to a dedicated cluster might be necessary for adequate performance.

Microsoft Azure Sentinel user interface